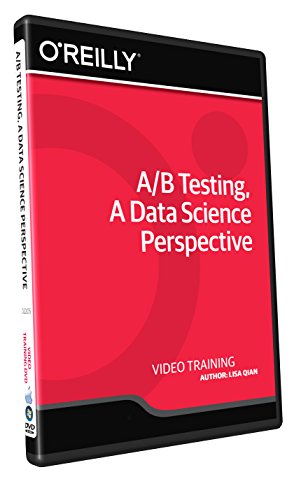

A/B Testing, A Data Science Perspective – Training DVD


This post contains affiliate links. As an Amazon Associate I earn from qualifying purchases.

Number of Videos: 1.25 hours – 9 lessons

Ships on: DVD-ROM

User Level: Beginner

Works On: Windows 7, Vista, XP; Mac OS X

Deciding whether or not to launch a new product or feature is a resource management bet for any Internet business. Conducting rigorous online A/B tests flattens the risk. Drawing on her experience at Airbnb, data scientist Lisa Qian offers a practical ten-step guide to designing and executing statistically sound A/B tests.

- Discover best practices for defining test goals and hypotheses

- Learn to identify controls, treatments, key metrics, and data collection needs

- Understand the role of appropriate logging in data collection

- Determine how to frame your tests (size of difference detection, visitor sample size, etc.)

- Master the importance of testing for systematic biases

- Run power tests to determine how much data to collect

- Learn how experimenting on logged out users can introduce bias

- Understand when cannibalization is an issue and how to deal with it

- Review accepted A/B testing tools (Google Analytics, Vanity, Unbounce, among others)

Lisa Qian focuses on search and discovery at Airbnb. She has a PhD in Applied Physics from Stanford University.

Product Features

- Learn A/B Testing, A Data Science Perspective from a professional trainer at your own desk.

- Visual training method, offering users increased retention and accelerated learning

- Breaks even the most complex applications down into simple steps.

- Easy to follow step-by-step lessons, ideal for all
